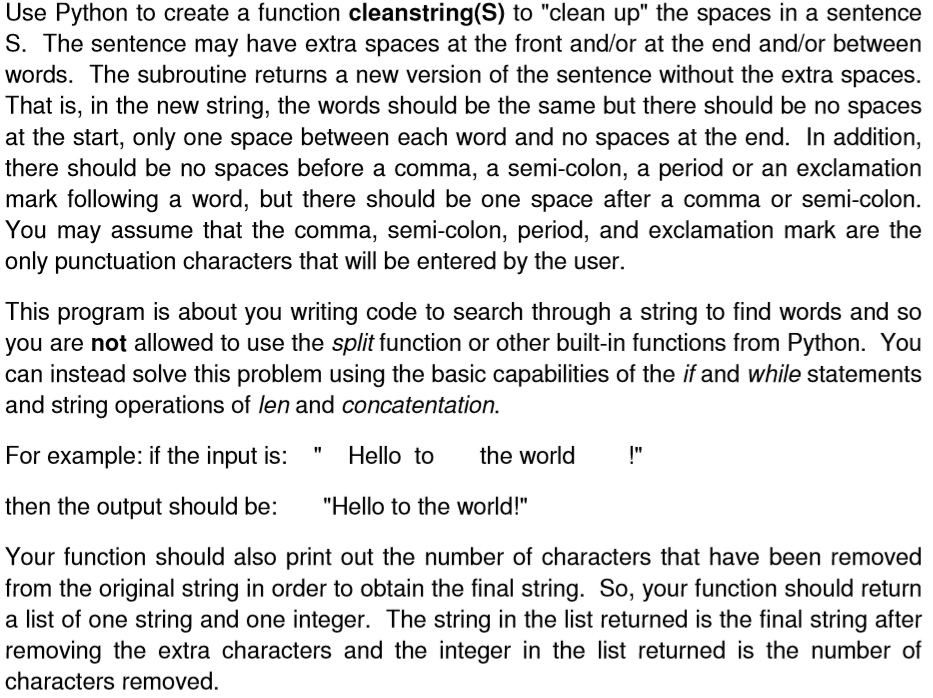

If you don't like the output of clean-text, consider adding a test with your specific input and desired output.

#Github python text cleaner bad sentences code#

Pull requests are especially welcomed when they fix bugs or improve the code quality. If you have a question, found a bug or want to propose a new feature, have a look at the issues page.

#Github python text cleaner bad sentences install#

There is also a scikit-learn compatible API to use in your pipelines. All of the parameters above work here as well. Install the package with `pip install clean-text`, then import the transformer with `from cleantext.sklearn import CleanTransformer` and create it with `cleaner = CleanTransformer(no_punct=False, lower=False)`.

#Github python text cleaner bad sentences free#

So far, only English and German are fully supported. It should work for the majority of western languages. If you need some special handling for your language, feel free to contribute.

For stop words in spaCy, look at the following script: `import spacy`, then `sp = spacy.load('en_core_web_sm')`, then `all_stopwords = sp.Defaults.stop_words`, and finally `text = "Nick likes to play football, however he is not too fond of tennis."`
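The stop-word filtering that the spaCy script prepares for can be sketched without any third-party dependency. The following is a minimal illustration, not spaCy's implementation: `STOP_WORDS` is a hypothetical, hand-picked set that is far smaller than spaCy's list, and `tokenize` and `remove_stop_words` are hypothetical helper names using a simple regex instead of a real tokenizer.

```python
import re

# Hypothetical, deliberately tiny stop-word set for illustration only;
# a real list (such as spaCy's) is much larger.
STOP_WORDS = {"is", "a", "an", "the", "for", "to", "of", "he", "not", "too", "however"}


def tokenize(text):
    """Split text into word tokens with a simple regex (not a real NLP tokenizer)."""
    return re.findall(r"[A-Za-z']+", text)


def remove_stop_words(text, stop_words=STOP_WORDS):
    """Return the tokens of `text` that are not stop words (case-insensitive)."""
    return [tok for tok in tokenize(text) if tok.lower() not in stop_words]


text = "Nick likes to play football, however he is not too fond of tennis."
filtered = remove_stop_words(text)
print(" ".join(filtered))
```

With a library-provided stop-word set, the same membership test applies; only the contents of the set change.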

#Github python text cleaner bad sentences download#

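You may also use only specific functions for cleaning. For this, take a look at the source code.

To remove stop words with spaCy, first download the language model with `python -m spacy download en` (spaCy 3 and later require the full model name, for example `python -m spacy download en_core_web_sm`). Once the language model is downloaded, you can remove stop words from text using spaCy.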

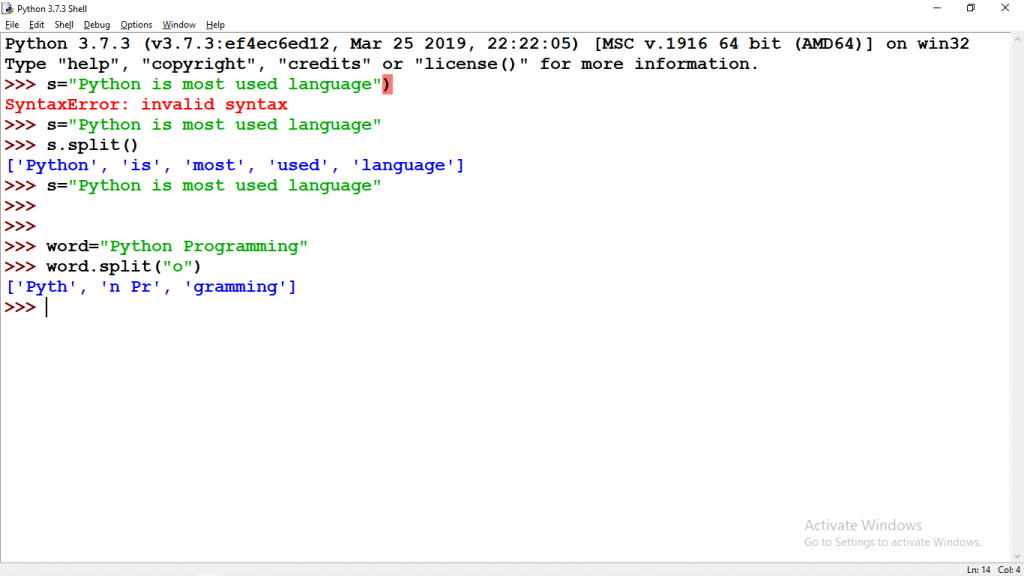

"you are right ", replace_with_email = "", replace_with_phone_number = "", replace_with_number = "", replace_with_digit = "0", replace_with_currency_symbol = "", lang = "en" # set to 'de' for German special handling )Ĭarefully choose the arguments that fit your task. Into this clean output: A bunch of 'new' references, including (). For instance, turn this corrupted input: A bunch of \\u2018new\\u2019 references, including (). Preprocess your scraped data with clean-text to create a normalized text representation. User-generated content on the Web and in social media is often dirty. Returns, 'this is a sample text to clean' There are other libraries available which you can use as well. clean ( 'This is A s$ample !!!! tExt3% to cleaN566556+2+59*/133', extra_spaces = True, lowercase = True, numbers = True, punct = True ) We can correct these by using the autocorrect library for python. Stop Word Any word like (is, a, an, the, for) that does not add value to the meaning of a sentence.

We will only use the word and sentence tokenizer Step 2: Removing Stop Words and storing them in a separate array of words. clean_words ( "your_raw_text_here", clean_all = False # Execute all cleaning operations extra_spaces = True, # Remove extra white spaces stemming = True, # Stem the words stopwords = True, # Remove stop words lowercase = True, # Convert to lowercase numbers = True, # Remove all digits punct = True, # Remove all punctuations reg : str = '', # Remove parts of text based on regex reg_replace : str = '', # String to replace the regex used in reg stp_lang = 'english' # Language for stop words ) Examples import cleantext cleantext. There are three main tokenizers word, sentence, and regex tokenizer. To choose a specific set of cleaning operations, cleantext. To return a list of words from the text, cleantext. basic as looking for keywords and phrases like marmite is bad or marmite is good. We can use a Python 3 package called ftfy, or ‘fixes text for you’, which is designed to deal with these encoding issues. To return the text in a string format, cleantext. From a sample dataset we will clean the text data and explore what. For example, stemming of words run, runs, running will result run, run, run)Ĭleantext requires Python 3 and NLTK to execute.

(Stemming is a process of converting words with similar meaning into a single word. ( Stop words are generally the most common words in a language with no significant meaning such as is, am, the, this, are etc.) This module can be used to replace keywords in sentences or extract keywords from sentences.
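To make the basic cleaning operations concrete (extra white spaces, lowercase, digits, punctuation), here is a minimal standard-library sketch. It is not the cleantext implementation: `simple_clean` is a hypothetical function whose flag names merely mirror the parameters quoted in the text, and it assumes that "punctuation" means the ASCII characters in `string.punctuation`.

```python
import re
import string


def simple_clean(text, extra_spaces=True, lowercase=True, numbers=True, punct=True):
    """Apply a selected combination of basic cleaning operations to `text`."""
    if lowercase:
        text = text.lower()
    if numbers:
        # Remove all ASCII digits.
        text = re.sub(r"[0-9]", "", text)
    if punct:
        # Remove every character listed in string.punctuation.
        text = text.translate(str.maketrans("", "", string.punctuation))
    if extra_spaces:
        # Collapse runs of whitespace into one space and trim both ends.
        text = re.sub(r"\s+", " ", text).strip()
    return text


print(simple_clean("This is A s$ample !!!! tExt3% to cleaN566556+2+59*/133"))
# prints: this is a sample text to clean
```

Whitespace is collapsed last on purpose: removing digits and punctuation can leave gaps behind, so normalizing spaces earlier would let double spaces reappear.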

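The stemming example for run, runs, running can be reproduced with a toy suffix stripper. This is only a sketch under strong assumptions: `naive_stem` is a hypothetical helper that handles just the `-ing` and plural `-s` endings, and it is not the algorithm NLTK uses; real stemmers such as NLTK's `PorterStemmer` cover far more cases.

```python
def naive_stem(word):
    """Strip a trailing '-ing' or plural '-s' from `word` (toy rule set only)."""
    word = word.lower()
    if word.endswith("ing") and len(word) > 5:
        stem = word[:-3]
        # Undo consonant doubling, e.g. "runn" -> "run".
        if len(stem) >= 2 and stem[-1] == stem[-2] and stem[-1] not in "aeiou":
            stem = stem[:-1]
        return stem
    if word.endswith("s") and not word.endswith("ss") and len(word) > 3:
        return word[:-1]
    return word


print([naive_stem(w) for w in ["run", "runs", "running"]])
# prints: ['run', 'run', 'run']
```

The length checks keep short words such as "is" or "sing" intact, which shows why rule-based stemmers need guards as well as suffix rules.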